What Is a Coding Agent? Comparing Agents to AI Code Assistants

Written by Mathew Pregasen

Published on January 21, 2026

Traditional code completion tools focus on small-scale productivity, accelerating syntax recall and micro-refactors within a single file. However, as LLMs improve and systems scale, the primary developer bottleneck shifts from writing lines of code to coordinating complex tasks across multiple files and testing environments.

Agentic coding addresses this new bottleneck by offloading the planning and execution of multi-step workflows, shifting the paradigm from "coding with" an assistant to having an agent "code for" the developer. A recent study of 20 Python-proficient developers found that this approach can cut manual effort by 50%, but because the agent still needs a high level of "babysitting" for oversight, total wall-clock time remains similar to traditional methods.

Code Completion vs. Agentic Coding

Traditional Code Completion

Traditional code completion tools, often called "assistants" or "Copilots," operate as stateless models (typically transformers) that predict the next series of tokens from the developer's immediate context. They work through a reactive suggestion loop, offering inline completions and syntax help as the developer types. By automating these routine micro-tasks, code completion tools reduce the cognitive load of syntax recall while letting the developer keep synchronous, granular control over the file.

However, their utility is generally restricted to local function-level or file-level context rather than broader architectural awareness. Ultimately, code completion is best viewed as an intelligent pair programmer that suggests the next line while the developer remains the primary driver.

What is Agentic Coding?

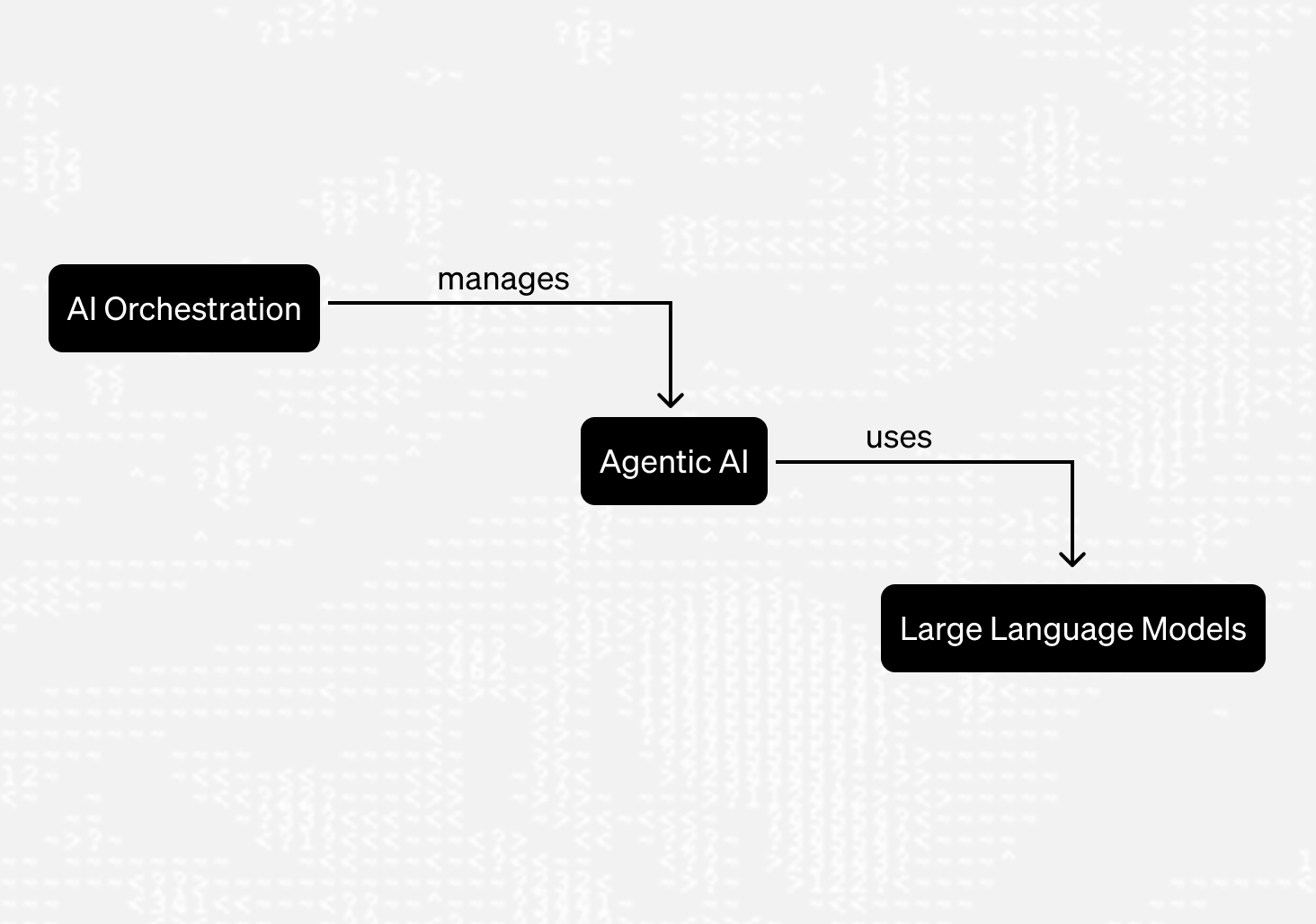

Agentic coding shifts the goal of LLM systems from reactive suggestions to autonomous task orchestration. These AI agents interpret high-level goals and execute workflows across entire files, codebases, tests, and environments. Unlike completion tools, agents maintain persistent state and project-wide awareness, allowing them to move through a "plan → code → test → pull request" cycle with minimal human intervention.

This capability is powered either by specialized agent modes within IDEs or by multi-agent ecosystems in which different models handle discrete roles, much like an assembly line, to ensure code quality and system integration. In practice, a developer might assign a specific issue or feature card to an agent, which then autonomously navigates the repository to implement the change.
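To make the "plan → code → test → pull request" cycle concrete, here is a minimal Python sketch of the control loop. Everything in it is hypothetical: `plan`, `write_code`, `run_tests`, and `open_pull_request` are stand-in stubs, not the API of OpenHands or any other tool, and a real agent would call an LLM and real build tools at each step. What the sketch shows is the shape of the loop: the agent carries persistent state across iterations and only opens a pull request once tests pass, handing the work back to a human if it runs out of attempts.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class AgentState:
    """State the agent keeps across steps, unlike a stateless completion call."""
    goal: str
    history: List[str] = field(default_factory=list)


def plan(state: AgentState) -> List[str]:
    # Hypothetical planner: a real agent would ask an LLM to break the goal into steps.
    return [f"edit files for: {state.goal}"]


def write_code(step: str, state: AgentState) -> None:
    # Hypothetical implementation step: record the action instead of editing files.
    state.history.append(f"code: {step}")


def run_tests(state: AgentState, attempt: int) -> bool:
    # Hypothetical test run: fails on the first attempt, passes on later ones.
    passed = attempt >= 2
    state.history.append(f"test attempt {attempt}: {'pass' if passed else 'fail'}")
    return passed


def open_pull_request(state: AgentState) -> str:
    # Hypothetical PR step: return a summary for the human reviewer.
    state.history.append("pull request opened")
    return f"PR for '{state.goal}' after {len(state.history)} recorded actions"


def run_agent(goal: str, max_attempts: int = 3) -> Tuple[Optional[str], AgentState]:
    """Plan -> code -> test, retrying until tests pass, then open a PR."""
    state = AgentState(goal=goal)
    for attempt in range(1, max_attempts + 1):
        for step in plan(state):
            write_code(step, state)
        if run_tests(state, attempt):
            return open_pull_request(state), state
    # Out of attempts: hand the work back to a human instead of opening a PR.
    return None, state


pr, state = run_agent("fix login bug")
print(pr)  # PR for 'fix login bug' after 5 recorded actions
```

A completion tool has no equivalent of `AgentState`: each suggestion is computed fresh from the current editor context.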

As a metaphor, agentic coding is akin to hiring a modular development studio to build a complete feature from a high-level goal, leveraging a team of specialized individuals to deliver the final product. Like a development studio, agentic systems are inherently modular, using different "members," or agents, to handle discrete roles such as planning, implementation, and quality assurance. However, the comparison breaks down in practice: while human developers generalize well across tasks, agentic ecosystems operate more like a hyper-specialized assembly line in which each agent is a rigid unit focused on a narrow, discrete task.
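The assembly-line structure can be sketched as a fixed pipeline of narrow roles, each consuming the previous stage's output. The `planner`, `implementer`, and `reviewer` functions below are hypothetical placeholders, not the agents of any particular framework. The sketch only illustrates the rigidity described above: each stage does one job, the reviewer can reject the work, and no stage can step in for a missing one.

```python
from typing import Callable, Dict, List

# A work item passed down the line; each "agent" reads it and adds its own output.
WorkItem = Dict[str, object]
Stage = Callable[[WorkItem], WorkItem]


def planner(item: WorkItem) -> WorkItem:
    # Hypothetical planning role: split the feature into fixed sub-tasks.
    feature = item["feature"]
    item["tasks"] = [f"{feature}: model", f"{feature}: API", f"{feature}: tests"]
    return item


def implementer(item: WorkItem) -> WorkItem:
    # Hypothetical implementation role: mark every planned task as done.
    item["done"] = list(item["tasks"])
    return item


def reviewer(item: WorkItem) -> WorkItem:
    # Hypothetical QA role: approve only if every planned task is done and tests are included.
    done = item.get("done", [])
    item["approved"] = set(done) == set(item.get("tasks", [])) and any(
        t.endswith("tests") for t in done
    )
    return item


def run_pipeline(feature: str, stages: List[Stage]) -> WorkItem:
    item: WorkItem = {"feature": feature}
    for stage in stages:
        item = stage(item)
    return item


print(run_pipeline("password reset", [planner, implementer, reviewer])["approved"])  # True
print(run_pipeline("password reset", [planner, reviewer])["approved"])  # False
```

Dropping the `implementer` stage does not cause any other stage to pick up its work; the reviewer simply rejects the result, which is the narrow, hyper-specialized behavior the metaphor points to.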

Differences in Architecture and Autonomy

When to use Agents over Code Completion

Agents are better for most tasks

Agentic coding excels when work spans multiple steps or files, provided the outcome can be expressed as a clear objective. This includes generating or refactoring modules and executing broad architectural changes. Agents are particularly effective for repetitive backlog work like large-scale test generation, where consistency and throughput matter most. In enterprise settings with standardized practices and well-defined goals, agentic systems can work in parallel across issues or pipelines, producing reviewable artifacts that integrate cleanly into existing processes.
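Because each agent run is independent, work across a backlog can be fanned out in parallel, with each result collected as a separate reviewable artifact. Below is a minimal sketch using Python's standard `concurrent.futures` module; `solve_issue` is a hypothetical stand-in for launching one agent on one issue, and the issue numbers and titles are made up.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def solve_issue(issue_id: int, title: str) -> Dict[str, object]:
    # Hypothetical stand-in for one agent run; a real run would edit code and run tests.
    return {"issue": issue_id, "branch": f"agent/issue-{issue_id}", "summary": f"Add tests for {title}"}


def fan_out(backlog: Dict[int, str], max_workers: int = 4) -> List[Dict[str, object]]:
    # One agent run per issue, executed concurrently; results come back in backlog order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(solve_issue, issue_id, title) for issue_id, title in backlog.items()]
        return [future.result() for future in futures]


backlog = {101: "parser", 102: "auth module", 103: "billing API"}
for artifact in fan_out(backlog):
    print(artifact["branch"], "-", artifact["summary"])
# agent/issue-101 - Add tests for parser
# agent/issue-102 - Add tests for auth module
# agent/issue-103 - Add tests for billing API
```

Threads fit this shape because a real agent run would mostly wait on model calls and test processes. Putting each result on its own branch lets reviewers accept or reject issues independently.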

... but code completion may be better for rapid development

Copilot-style coding is still the better choice for small, iterative edits where human judgment and situational context are most important. It works well for rapid prototyping and exploratory coding directly in the IDE, where developers are actively shaping ideas and adjusting direction in real time. It is also effective for learning new libraries, since quick inline suggestions can reinforce correct usage without taking control of the workflow. For fine-grained tasks that require constant feedback and iteration, autonomous agents would add unnecessary overhead.

Research shows agents can substantially reduce manual effort

An academic study at Carnegie Mellon University found that autonomous agents enable developers to complete complex tasks that are often unachievable with completion tools alone, providing early empirical evidence of reduced manual effort. This shift toward increased autonomy allows engineers to move away from token-level micro-management and focus instead on higher-level task execution in complex development scenarios.

While the research noted a caveat, namely that agents can be harder to understand because they provide less visibility into their internal processes, this is likely a temporary interpretability challenge. As agentic systems get better at transparently summarizing their strategies and changes, they are well positioned to become the preferred approach to efficient development.

Risks

The primary hurdle for standard assistants is often simply getting the whole team to adopt them, but even with full adoption they offer a much lower ceiling for effort reduction than their agentic counterparts.

Autonomous agents, on the other hand, introduce higher-stakes architectural risks. The most significant concern is the potential for agents to introduce non-obvious defects across a codebase that evade local reasoning. When using coding agents, robust automated testing and strict CI/CD pipelines are crucial for maintaining system integrity. Because agents also require broad access to repositories and build tools, careful permission scoping is essential to limit the damage of an unintended action or misconfiguration.
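Permission scoping can start with an allowlist checked before an agent runs any command. The sketch below is hypothetical and tool-agnostic; it is not the configuration format of any specific agent. It uses the standard library's `shlex.split` to tokenize the command, permits only listed executables, refuses shell metacharacters, and blocks a few destructive `git` subcommands. The policy sets are illustrative assumptions.

```python
import shlex

# Hypothetical policy: executables the agent may run, and git subcommands it may not.
ALLOWED_EXECUTABLES = {"git", "pytest", "ls", "cat"}
BLOCKED_GIT_SUBCOMMANDS = {"push", "reset", "clean"}
SHELL_METACHARACTERS = set(";|&<>`$")


def is_permitted(command: str) -> bool:
    """Return True if a proposed command passes the allowlist.

    Assumes the caller then runs the tokens without a shell, e.g. subprocess.run(tokens).
    """
    try:
        tokens = shlex.split(command)
    except ValueError:
        # Unbalanced quotes: refuse rather than guess what was meant.
        return False
    if not tokens or tokens[0] not in ALLOWED_EXECUTABLES:
        return False
    # Refuse shell metacharacters anywhere, in case the command ever reaches a shell.
    if any(ch in SHELL_METACHARACTERS for tok in tokens for ch in tok):
        return False
    # Conservatively refuse any git call that mentions a blocked subcommand.
    if tokens[0] == "git" and BLOCKED_GIT_SUBCOMMANDS & set(tokens[1:]):
        return False
    return True


print(is_permitted("pytest tests/ -q"))      # True
print(is_permitted("git push origin main"))  # False
print(is_permitted("cat notes.txt | sh"))    # False
```

A check like this complements, rather than replaces, running the agent in an isolated environment with narrowly scoped credentials; for example, allowlisting `pytest` still lets test code execute arbitrary logic.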

Without explicitly structured goals, agents may also suffer from "context blindness," producing code that appears syntactically correct but fails to account for implicit domain assumptions or high-level intent. To maintain traceability, agent-generated pull requests must adhere to standard human conventions, like focused commits and staging reviews, to ensure they integrate cleanly into existing version control workflows.

Finally, success can be tracked with metrics such as pull request approval and test coverage, and teams should consult emerging frameworks like the Agentic Coding Principles for a structured path forward.
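Pull request approval and test coverage are straightforward to compute once agent-authored pull requests are tagged. The sketch below assumes a hypothetical record format (`approved`, `lines_covered`, `lines_total`); real values would come from your code host and coverage tool, and the example numbers are invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AgentPR:
    # Hypothetical record for one agent-authored pull request.
    approved: bool
    lines_covered: int  # changed lines exercised by tests
    lines_total: int    # total changed lines


def approval_rate(prs: List[AgentPR]) -> float:
    # Fraction of agent PRs that reviewers approved.
    return sum(pr.approved for pr in prs) / len(prs) if prs else 0.0


def changed_line_coverage(prs: List[AgentPR]) -> float:
    # Pooled coverage over all changed lines, so tiny PRs don't skew the figure.
    total = sum(pr.lines_total for pr in prs)
    return sum(pr.lines_covered for pr in prs) / total if total else 0.0


# Made-up example data, for illustration only.
prs = [AgentPR(True, 80, 100), AgentPR(False, 10, 50), AgentPR(True, 45, 50)]
print(f"approval rate: {approval_rate(prs):.2f}")                  # approval rate: 0.67
print(f"changed-line coverage: {changed_line_coverage(prs):.3f}")  # changed-line coverage: 0.675
```

Pooling lines rather than averaging per-PR percentages is a design choice: a two-line PR with 100% coverage would otherwise count as much as a large, poorly tested one.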

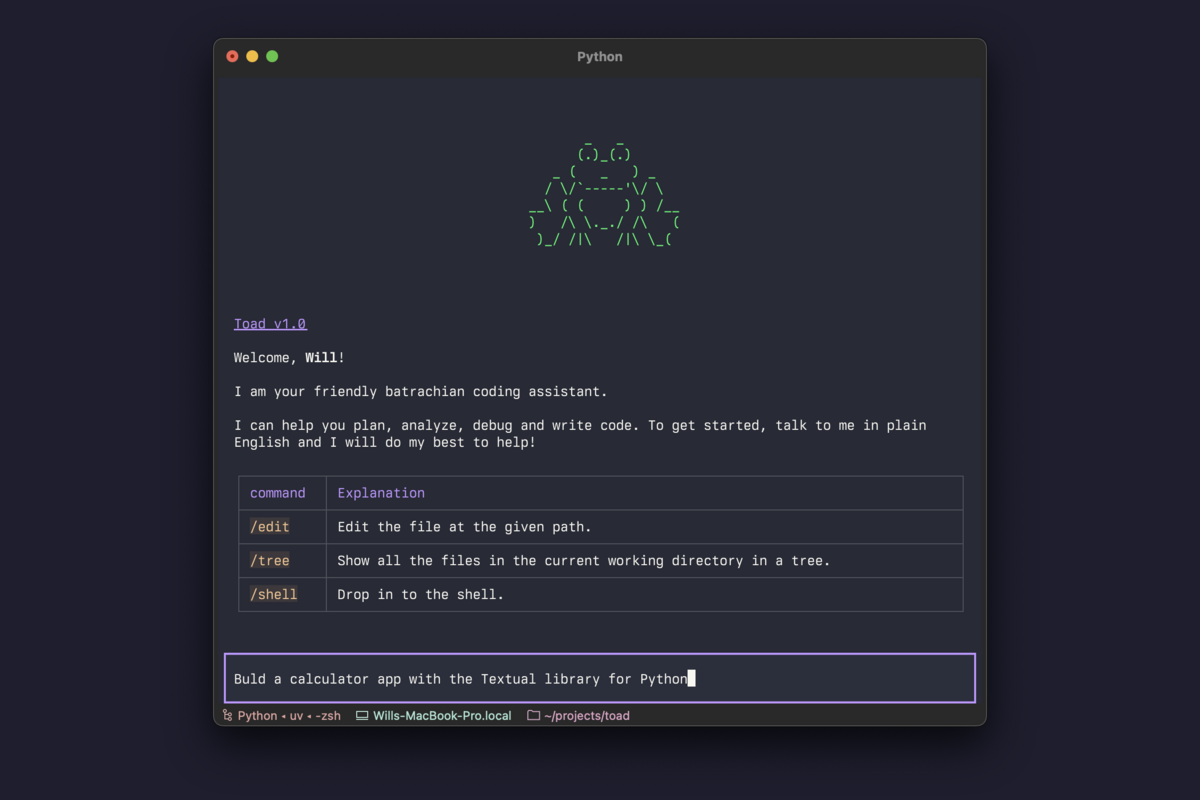

Tooling Landscape

Standard tools like GitHub Copilot are great for quick IDE-level suggestions, but the industry is rapidly pivoting toward a more powerful class of agentic tools, like Cline, Continue, and Roo Code, that can autonomously handle complex work across multiple files. This shift is becoming more standardized through protocols like the Agent Client Protocol (ACP) and platforms like OpenHands, which help developers manage and audit different agents from central hubs like GitHub’s Agent HQ.

Conclusion: Agents will make developers more productive

The shift from token-level suggestions to autonomous cooperation represents a change in the developer’s role, from writing code to orchestrating workflows. Future development will likely rely on hybrid workflows that combine fast code completions with the task-level autonomy of agents. Looking forward, teams should experiment with agentic systems in limited settings while refining the goals and prompting strategies that guide them. Measuring their effects on existing delivery cycles will provide a data-driven basis for integrating these systems into the broader software development lifecycle.

- Code completion tools optimize for local productivity by accelerating typing, syntax recall, and small refactors.

- As codebases grow and tasks span multiple files, tests, and systems, developers increasingly spend time coordinating work rather than writing code.

- Agentic coding is a response to this coordination bottleneck, aiming to offload planning and execution of multi-step tasks.

- A recent study of 20 Python-proficient developers indicates that having an agent code for the developer rather than with them (i.e., agentic coding) cuts user effort by 50%, but it requires more proverbial babysitting, so wall-clock time remains similar.
